Balancing innovation with sustainability: AI’s energy challenge

Artificial intelligence (AI) is fundamentally changing the technology landscape, revolutionizing everything from advanced cancer screening techniques in healthcare to driverless cars in transportation. However, the continued integration of AI across almost every industry brings a significant, often overlooked challenge: colossal energy consumption.

Consider generative AI, a modern form of machine learning that reportedly uses around 33 times more energy than machines running task-specific software. Its computations take place in sophisticated data centers around the world, which are immensely powerful but also enormous energy consumers. According to The Verge, training a generative AI model like GPT-3 requires nearly 1,300 megawatt-hours (MWh) of electricity, roughly the annual energy usage of 130 U.S. homes. This stark reality underscores a critical issue: as the capabilities of artificial intelligence grow, so too does its demand for energy.

The surge in artificial intelligence applications across industries is driving an urgent need to rethink our outdated energy infrastructure. To sustainably support the power requirements of AI, transitioning to clean and renewable energy sources becomes not just a priority, but a necessity.

The rise and rise of AI

The concept of artificial intelligence dates back to the 1950s, when the term was first coined by John McCarthy. Through the mid-70s, AI flourished as computers advanced and became more accessible. The AI subfield of machine learning emerged, with research into natural language processing and robotics following in the 1980s. The turn of the millennium brought advances in speech recognition, image classification, and natural language processing, laying the foundation for the AI we’ve come to use in our daily lives.

Today, AI technology has made leaps in its capabilities and is now integrated into many day-to-day consumer products. Consider Apple. Siri, once its most futuristic mobile feature, pales in comparison to the recently revealed Apple Intelligence, a multi-platform suite of generative AI capabilities for iOS. Its development demonstrates just one of the many ways tech giants are drawing on large language models (LLMs) like GPT-4, with Apple officially integrating ChatGPT across the Apple Intelligence platform this fall.

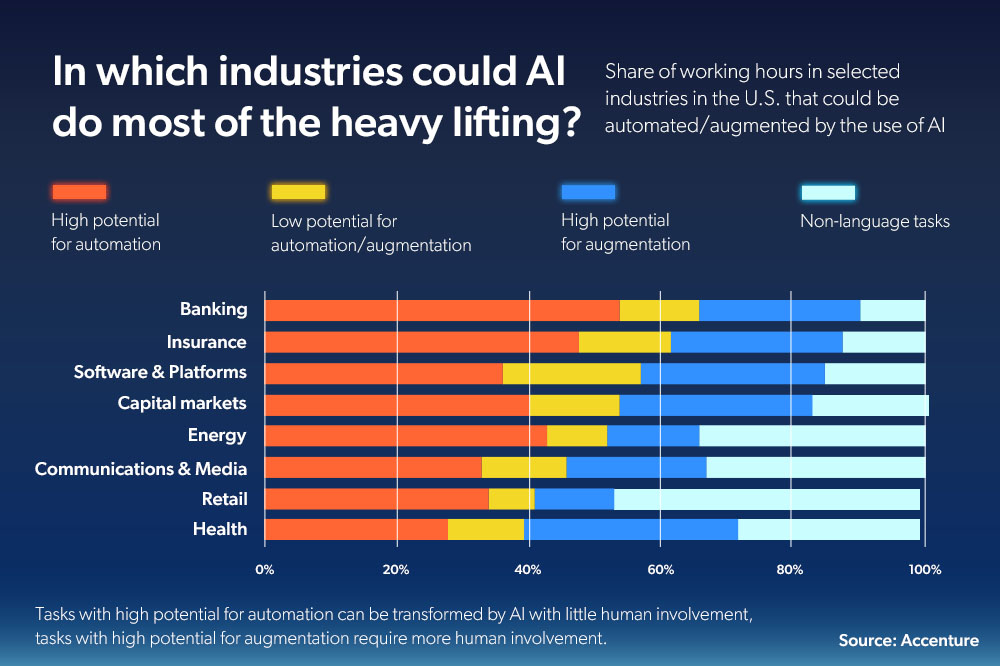

Industrially, too, AI promises to be transformative. From automotive companies to shipbuilders, manufacturers worldwide are embedding AI into their processes to create better, safer products and reduce time-to-market, marking a significant shift in how industries operate.

AI is also making headway in the energy sector. According to the WEF, AI has the potential to develop clean energy technologies, optimize solar and wind farms, improve carbon processes, predict weather patterns, and catalyze novel breakthroughs in green energy sources like nuclear fusion. Modernized AI-powered smart grids could help solve the instability problem caused by aging infrastructure by learning from consumer usage patterns to better predict energy demands and avoid outages. Importantly, AI also promises to address the intermittent nature of renewable energy, optimizing energy storage systems (ESS) by forecasting energy production and consumption.

Powerful innovations are happening at the backend of AI, too, to keep up with these sizeable multi-sector developments. In pursuit of faster processing speeds and higher efficiency, Nvidia, a leading chip designer, recently unveiled a superchip that promises to accelerate AI training to unprecedented speeds.

However, for all of its uses, AI commands an enormous amount of energy just to operate. So, what exactly is it about AI that requires so much power?

AI’s insatiable appetite for power

According to Forbes, the reasons behind AI’s vast energy consumption are multifaceted. First, AI models are trained on enormous datasets. While a model is being fed huge numbers of examples to “learn” from, GPUs (the chips that process large quantities of data) run 24 hours a day. Training an AI model can take anywhere from a few minutes to a few months, depending on its complexity, and energy consumption mounts accordingly. And while GPUs have advanced significantly over time, improvements in their energy efficiency are being outpaced by the ever-growing demand for more computational power.

The second reason is inference, the process by which an AI model responds to a user’s query. Before it replies, the AI must first “understand” the question and then “think” of an answer, which also requires GPU processing power. Widely adopted models like ChatGPT handle an estimated 10 million queries per day. With each query sent to ChatGPT producing an estimated 100 times more CO2 than a single Google search, the cumulative impact of AI’s energy consumption adds up quickly, and that’s just from one AI model. Outside of LLMs, other AI-driven processes like image and video recognition, simulation, and autonomous systems like self-driving cars, drones, and robots further ramp up energy demand.
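To get a feel for that cumulative impact, the two estimates quoted above can be multiplied together. The short Python sketch below is only back-of-the-envelope arithmetic: it uses the article's figures as given, and the function name and the "search-equivalent" unit are illustrative choices rather than an established metric.

```python
# Figures quoted in the article (estimates, not measurements).
QUERIES_PER_DAY = 10_000_000   # estimated daily ChatGPT queries
CO2_RATIO_VS_SEARCH = 100      # one query ~ 100x the CO2 of one Google search


def search_equivalents(queries: int, ratio: int = CO2_RATIO_VS_SEARCH) -> int:
    """Express a number of AI queries as an equivalent number of web searches.

    'Search-equivalent' is a hypothetical unit used here for illustration.
    """
    return queries * ratio


daily = search_equivalents(QUERIES_PER_DAY)
yearly = daily * 365

print(f"Per day:  {daily:,} search-equivalents")
print(f"Per year: {yearly:,} search-equivalents")
```

On these estimates, one day of ChatGPT queries would carry roughly the CO2 footprint of a billion Google searches.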

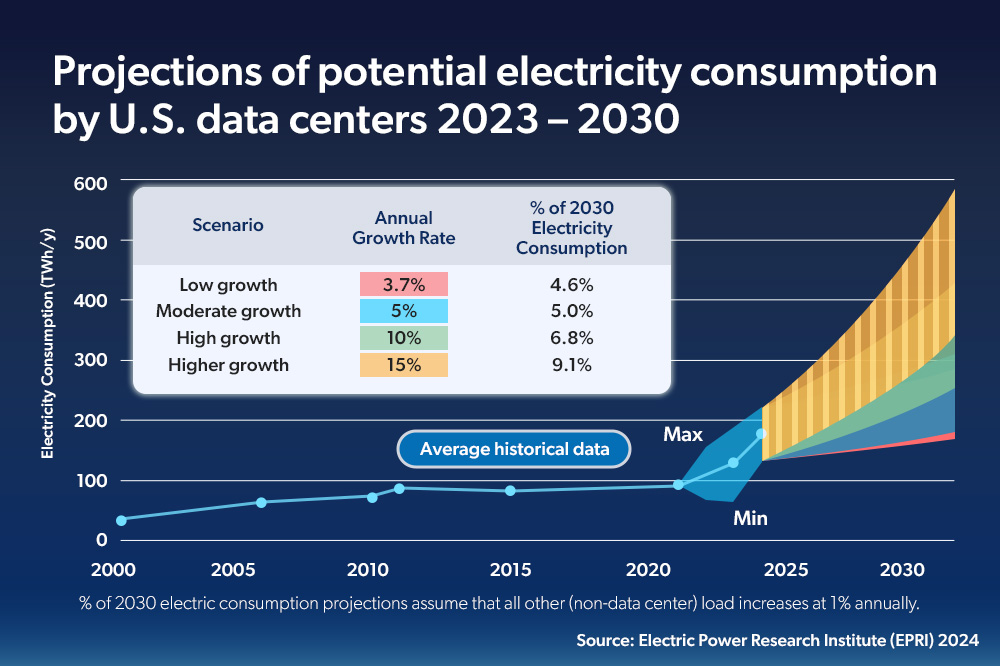

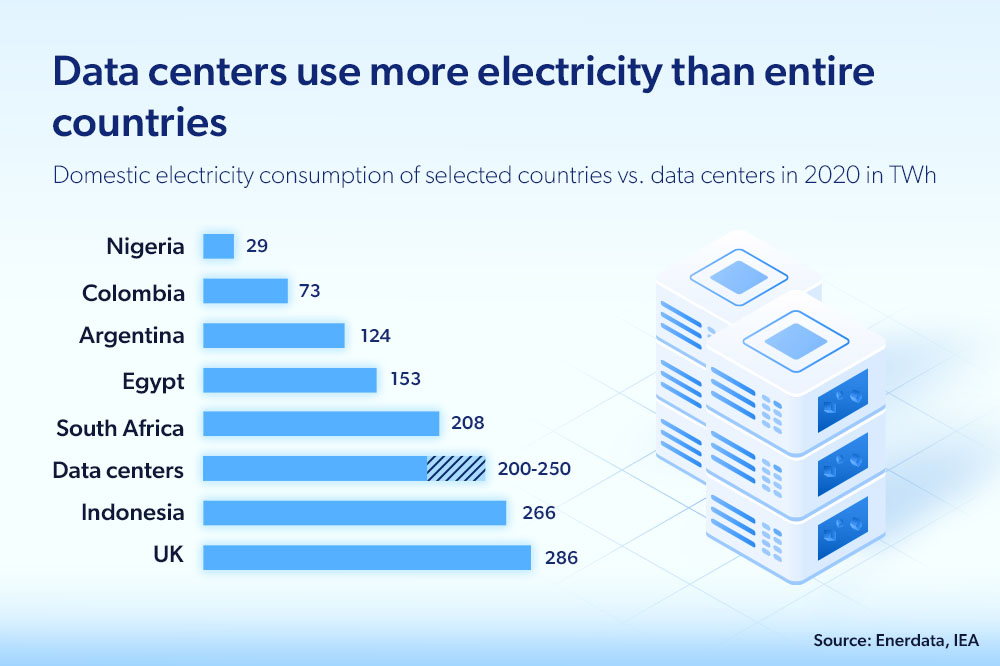

Many of the servers that power AI systems are housed in data centers around the U.S., and keeping up with AI’s power demands has turned those facilities into enormous energy consumers. As AI’s rapid innovation continues, it’s estimated that by 2027, data centers alone could use between 85 and 134 terawatt-hours (TWh) of electricity annually, comparable to the yearly energy consumption of entire countries like Argentina, the Netherlands, and Sweden. By 2030, data center power demand is projected to grow by 160%, according to research by Goldman Sachs.
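Percentage growth figures are easy to misread: growth of 160% means demand reaches 2.6 times its starting level, not 1.6 times. The sketch below works through that conversion and the width of the 85 to 134 TWh range. The two projections come from different sources, so it treats them separately, and the helper name is an illustrative choice.

```python
def apply_growth(baseline: float, growth_percent: float) -> float:
    """Return the value reached after growing `baseline` by `growth_percent` percent."""
    return baseline * (1 + growth_percent / 100)


# Goldman Sachs projection quoted in the article: +160% by 2030.
multiplier = apply_growth(1.0, 160)
print(f"160% growth -> {multiplier:.1f}x the starting demand")

# 2027 estimate quoted in the article: 85 to 134 TWh per year.
LOW_TWH, HIGH_TWH = 85, 134
midpoint = (LOW_TWH + HIGH_TWH) / 2
print(f"Range midpoint: {midpoint} TWh")
print(f"High estimate is {HIGH_TWH / LOW_TWH:.2f}x the low estimate")
```

The gap between the low and high estimates is a reminder of how uncertain these projections still are.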

These unprecedented levels of energy consumption create an urgent need to incorporate clean energy technologies across the entirety of the AI ecosystem.

Toward AI’s eco-evolution

The sudden rise in energy demand from AI systems over the last few years has highlighted the need to ensure that they are powered by clean energy. One way to move toward green AI is by building eco-friendly data centers. The IEA predicts that global data center electricity demand will more than double from 2022 to 2026. Hanwha, a leading clean energy solutions provider, is leveraging synergy from its diverse portfolio spanning the entire value chain across solar, hydrogen, wind, and its construction business to build data centers with a low environmental impact to help manage this demand.

Having already completed nine data centers with a further two under construction, Hanwha Corporation Engineering & Construction Division has significant expertise in the field of green data center development. To ensure a more eco-friendly design from the outset, Hanwha is looking at ways to integrate its clean energy infrastructure into these data centers, such as installing high-efficiency solar panels on their rooftops. As well as taking power efficiency and reliable operation into consideration, Hanwha is examining innovative ways to minimize power consumption. One such method is by regenerating waste heat emitted by servers as usable power. By integrating this technology, Hanwha has been able to reduce the total annual power consumption of one of its data centers significantly, almost leveling it with the amount of energy used solely by the IT equipment inside.
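Comparing a facility's total power consumption with the energy used by its IT equipment alone is what the data center industry commonly tracks as power usage effectiveness (PUE): total facility energy divided by IT equipment energy, with values closer to 1.0 meaning less overhead for cooling and power delivery. The article does not name this metric or publish figures, so the sketch below uses hypothetical numbers purely to show how the ratio behaves.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total energy that does not reach the IT equipment."""
    return 1 - 1 / pue_value


# Hypothetical annual figures, NOT taken from Hanwha or the article.
IT_LOAD_KWH = 1_000_000
before = pue(total_facility_kwh=1_500_000, it_equipment_kwh=IT_LOAD_KWH)
after = pue(total_facility_kwh=1_100_000, it_equipment_kwh=IT_LOAD_KWH)

for label, value in (("before", before), ("after", after)):
    print(f"{label}: PUE {value:.2f}, overhead {overhead_share(value):.0%} of total")
```

A facility whose total consumption is "almost level" with its IT load would sit just above a PUE of 1.0.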

Another method is to reduce the amount of power needed to keep servers cool enough to avoid overheating. Hanwha has been studying the placement of data centers in areas with cooler temperatures, then employing natural air conditioning technology that moves cooler air from the outside in, reducing the power needed to keep cooling systems running. It is also exploring cutting-edge clean technologies such as containment cooling, which traps hot air in the thermal aisles so that it cannot escape back toward the servers and mix with cold air; direct chip cooling, which reduces temperatures by passing cold water directly over the chips; and immersion cooling, which immerses servers directly in a special cooling fluid.

In addition to reducing the energy consumption of data centers through smart construction, as Hanwha is doing, some tech companies are investigating solutions like placing data centers underwater or close to offshore wind farms that can provide the base power needed for their uninterrupted operation. These initiatives can help ensure that AI is powered cleanly from the top of the supply chain, allowing its rapid innovation to continue with less risk of undermining climate change goals.

AI holds tremendous potential to advance societies, driving innovation and efficiency across various sectors. While mounting industry and stakeholder recognition of AI’s energy consumption is encouraging, it is crucial to make the environmental challenges posed by that growing consumption a higher priority. Going forward, industries must continuously strive to integrate cleaner energy solutions across the AI supply chain to ensure that as AI’s rapid advancement continues, it is a boon and not a burden in the clean energy transition.
